Computing: Common 'data structure' revamped to work with multi-core chips.

Every undergraduate computer-science major takes a course on data structures, which describes different ways of organizing data in a computer's memory. Every data structure has its own advantages: Some are good for fast retrieval, some for efficient search, some for quick insertions and deletions, and so on. Scientists have now developed a new way of implementing priority queues that lets them keep pace with the addition of new cores. In simulations, algorithms using their data structure continued to demonstrate performance improvement with the addition of new cores, up to a total of 80 cores.

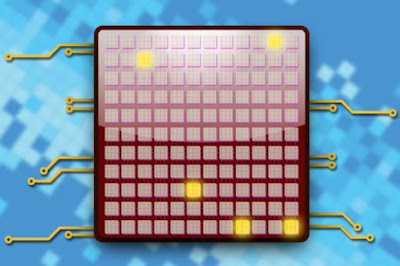

Today, hardware manufacturers are making computer chips faster by giving them more cores, or processing units. But while some data structures are well adapted to multicore computing, others are not. In principle, doubling the number of cores should double the efficiency of a computation. With algorithms that use a common data structure called a priority queue, that's been true for up to about eight cores -- but adding any more cores actually causes performance to plummet.

At the Association for Computing Machinery's Symposium on Principles and Practice of Parallel Programming in February, researchers from MIT's Computer Science and Artificial Intelligence Laboratory will describe a new way of implementing priority queues that lets them keep pace with the addition of new cores. In simulations, algorithms using their data structure continued to demonstrate performance improvement with the addition of new cores, up to a total of 80 cores.

A priority queue is a data structure that, as its name might suggest, sequences data items according to priorities assigned them when they're stored. At any given time, only the item at the front of the queue -- the highest-priority item -- can be retrieved. Priority queues are central to the standard algorithms for finding the shortest path across a network and for simulating events, and they've been used for a host of other applications, from data compression to network scheduling.
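For readers who have not met the structure before, here is a minimal single-threaded sketch in Python, built on the standard library's `heapq` module. The class name, the method names, and the convention that a smaller number means a higher priority are all assumptions made for illustration; none of them come from the researchers' paper.

```python
import heapq


class PriorityQueue:
    """Minimal heap-backed priority queue (illustrative only).

    Assumption: a smaller number means a higher priority.
    """

    def __init__(self):
        self._heap = []
        self._count = 0  # tie-breaker: equal priorities leave in insertion order

    def insert(self, priority, item):
        # Each entry is stored with the priority it was assigned on insertion.
        heapq.heappush(self._heap, (priority, self._count, item))
        self._count += 1

    def pop_front(self):
        # Only the front (highest-priority) entry can be retrieved.
        priority, _, item = heapq.heappop(self._heap)
        return priority, item

    def __len__(self):
        return len(self._heap)


pq = PriorityQueue()
pq.insert(3, "compress block")
pq.insert(1, "route packet")
pq.insert(2, "simulate event")

# Items come out by priority, not by the order in which they were inserted.
order = [pq.pop_front()[1] for _ in range(len(pq))]
print(order)  # ['route packet', 'simulate event', 'compress block']
```

Shortest-path and event-simulation algorithms use the same pattern: insert pending work with a priority (a distance or a timestamp), then repeatedly take whatever is at the front.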

With multicore systems, however, conflicts arise when multiple cores try to access the front of a priority queue at the same time. The problem is compounded by modern chips' reliance on caches -- high-speed memory banks where cores store local copies of frequently used data.

"As you're reading the front of the queue, the whole front of the queue will be in your cache," says Justin Kopinsky, an MIT graduate student in electrical engineering and computer science and one of the new paper's co-authors. "All of these guys try to put the first element in their cache and then do a bunch of stuff with it, but then somebody writes to it, and it invalidates everybody else's cache. And this is like an order-of-magnitude slowdown -- maybe multiple orders of magnitude."
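The bottleneck can be modeled, very loosely, as several workers that all have to go through the same front element. The Python sketch below is an assumption-laden illustration rather than the researchers' design: the names `shared_heap`, `front_lock`, and `worker` are hypothetical, and a lock stands in for the hardware effect. Python threads do not reproduce cache invalidation (and in standard CPython they do not run bytecode in parallel), so the sketch shows only the logical point that every removal is serialized at a single spot.

```python
import heapq
import threading

# Hypothetical shared queue: 1,000 tasks, smaller number = higher priority.
shared_heap = [(priority, f"task-{priority}") for priority in range(1000)]
heapq.heapify(shared_heap)

front_lock = threading.Lock()  # every worker must hold this to touch the front
removed = []                   # (worker_id, priority), in removal order


def worker(worker_id):
    while True:
        with front_lock:       # all workers queue up here, one at a time
            if not shared_heap:
                return
            priority, _task = heapq.heappop(shared_heap)
            removed.append((worker_id, priority))


threads = [threading.Thread(target=worker, args=(i,)) for i in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Strict priority order is preserved, but only because no two workers
# were ever allowed to remove an item at the same moment.
priorities = [p for _, p in removed]
print(priorities == list(range(1000)))  # True
```

Adding more workers to this model gives no extra throughput at the front of the queue, since each one simply waits its turn; on real hardware the cache traffic Kopinsky describes makes the waiting more expensive still.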
